Masking and Scheduling LoRA and Model Weights

As of Monday, December 2nd, ComfyUI now supports masking and scheduling LoRA and model weights natively as part of its conditioning system. It is compatible with all models. The update also includes an extensive ModelPatcher rework and introduces wrappers and callbacks so that custom node implementations require fewer hacks, but this blog post will focus solely on how to mask and schedule LoRA and model weights. A separate post aimed more at custom node developers will cover the other features at a later date.

TL;DR new features:

Masking LoRA and Model Weights

Scheduling LoRA and Model Weights

Conditioning Helper Nodes

Expanded ModelPatcher system for developers (not covered in this post)

Masking will be explained first, then scheduling.

Masking

Different weights in different places.

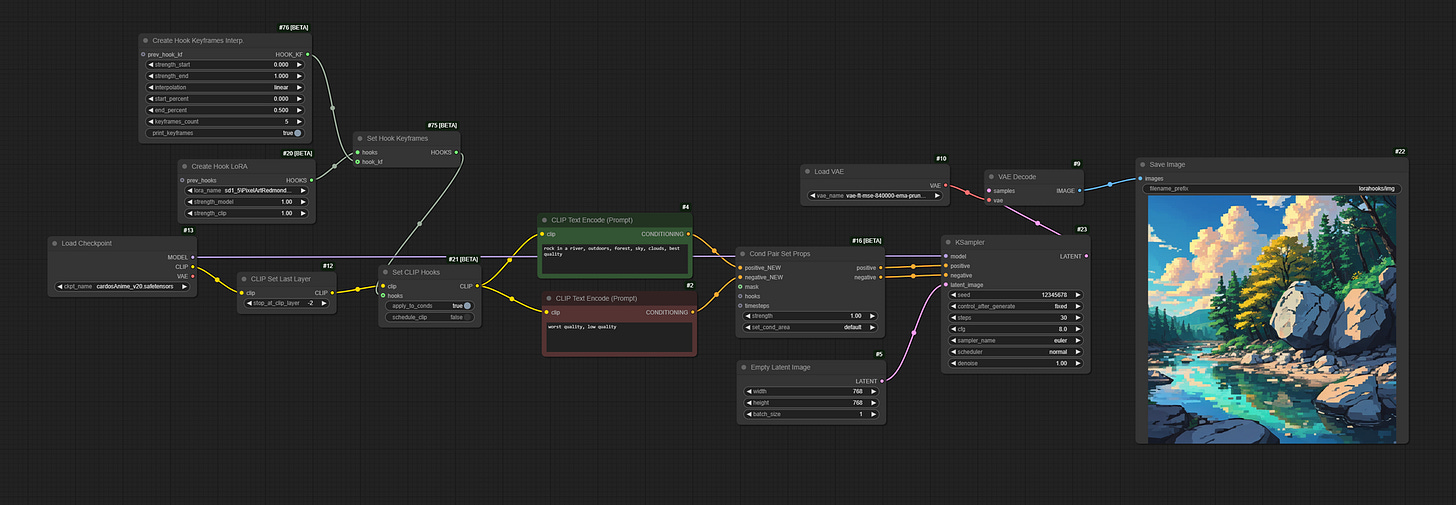

So you know what you're getting into, here is the full workflow used to make the image at the top of this page. To keep things simple it does not do any scheduling, and it masks both LoRA and model weights:

Normally, you'd use Load LoRA nodes or custom nodes' equivalent to apply a LoRA to the whole model. While the system introduced in this update works seamlessly with existing Load LoRA nodes, the new nodes allow you to hook specific LoRAs and model weights freely to CLIP and conditioning. The key loader nodes are 'Create Hook LoRA' and 'Create Hook Model as LoRA' nodes.

Let's focus on the top branch of that workflow to get an idea of how these nodes work:

First, the Create Hook LoRA node loads the LoRA weights, as you would expect from the existing Load LoRA node. However, it does NOT immediately register the weights on the CLIP and model, which is why there are no model/clip inputs or outputs. These are just the weights that can now be 'hooked' to specific CLIP and conditioning.

A quick clarification on what CLIP, conditioning, and model are:

For txt2whatever models, CLIP is used to turn (encode) text into stuff (conditioning) that the actual diffusion model (model) interprets into what becomes the output.

So, text going through [CLIP] gives you [conditioning], and [conditioning] going through the [model] gives you your output.

[CLIP] and the [model] are totally separate - after the CLIP Text Encode nodes, CLIP is done with its thing, leaving only the [conditioning] for the [model] to deal with.

The [model] doesn't do diffusion until it performs sampling, so the KSampler node in this workflow is where that happens. The [conditioning] is the stuff that tells the [model] what it should do when it sees it.

The purpose of this explanation is that CLIP and the core model are two separate things, so the weights for each are different, and thus LoRAs/models may contain both CLIP weights (affect CLIP) and 'model' weights (affect model for diffusion).

Set CLIP Hooks makes the output CLIP use the CLIP weights in the hook whenever it gets around to performing Text Encode, as if a Load LoRA was attached. When 'apply_to_conds' is set to True (default), the nodes that perform Text Encode will automatically attach the same hooks that were on the CLIP to the conditioning that comes out of them. Is this necessary? No - you could manually attach the hooks to the conditioning later and get the same result. But it makes it less likely that you accidentally forget to hook both the CLIP and the conditioning, if you wish to use both CLIP and model weights. You can purposely skip the Set CLIP Hooks node if you do not wish to use any CLIP weights; you'd just need to attach the hooks to the conditioning later. We will ignore the 'schedule_clip' toggle until the scheduling section.

So, what this branch of nodes does is load the CLIP + model weights (assuming both exist) from the LoRA and set those hooks onto the CLIP to tell it to use them whenever it does text encoding. The apply_to_conds toggle lets it know to also automatically mark the output conditioning with the same hooks that were passed into Set CLIP Hooks.

Before talking about Cond Pair Set Props and related nodes, let's now jump down to the bottom branch:

Everything here is (generally) the same as the top branch, but it uses the Create Hook Model as LoRA node instead of the Create Hook LoRA node. Basically, at strength_model=1.0, the base model will use the same weights as the model specified here (target model), and the same goes for the CLIP with the corresponding strength_clip. At a strength of 0.0, the base model's weights will not be modified. Any value between 0.0 and 1.0 will use the weighted average of the base model's and the target model's weights. Strengths greater than 1.0 or less than 0.0 can be used too, as with normal LoRAs. In general, the resulting weights can be interpreted as

base_model*(1-strength) + target*strength

This allows you to do some powerful on-the-fly merging of model weights. It loads the CLIP and model weights from the target model, and then stores them as a LoRA hook. We've already gone over the implications of that when discussing the top branch.
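To make the strength formula concrete, here is a minimal sketch of the blend on plain Python lists. The function name `blend_weights` and the dict-of-lists layout are assumptions made for illustration only; this is not how ComfyUI stores or applies the weights internally.

```python
def blend_weights(base, target, strength):
    """Blend two weight dicts key by key: base*(1-strength) + target*strength.

    Hypothetical helper for illustration; real model weights are tensors.
    """
    return {
        name: [
            b * (1.0 - strength) + t * strength
            for b, t in zip(base[name], target[name])
        ]
        for name in base
    }


# Toy "models" with a single three-value weight each.
base = {"layer.weight": [0.0, 2.0, 4.0]}
target = {"layer.weight": [1.0, 0.0, 8.0]}

for strength in (0.0, 0.5, 1.0, 1.5):
    print(strength, blend_weights(base, target, strength))
```

At strength 0.0 the base weights come back unchanged, at 1.0 the target weights come back, and values outside that range extrapolate past either model.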

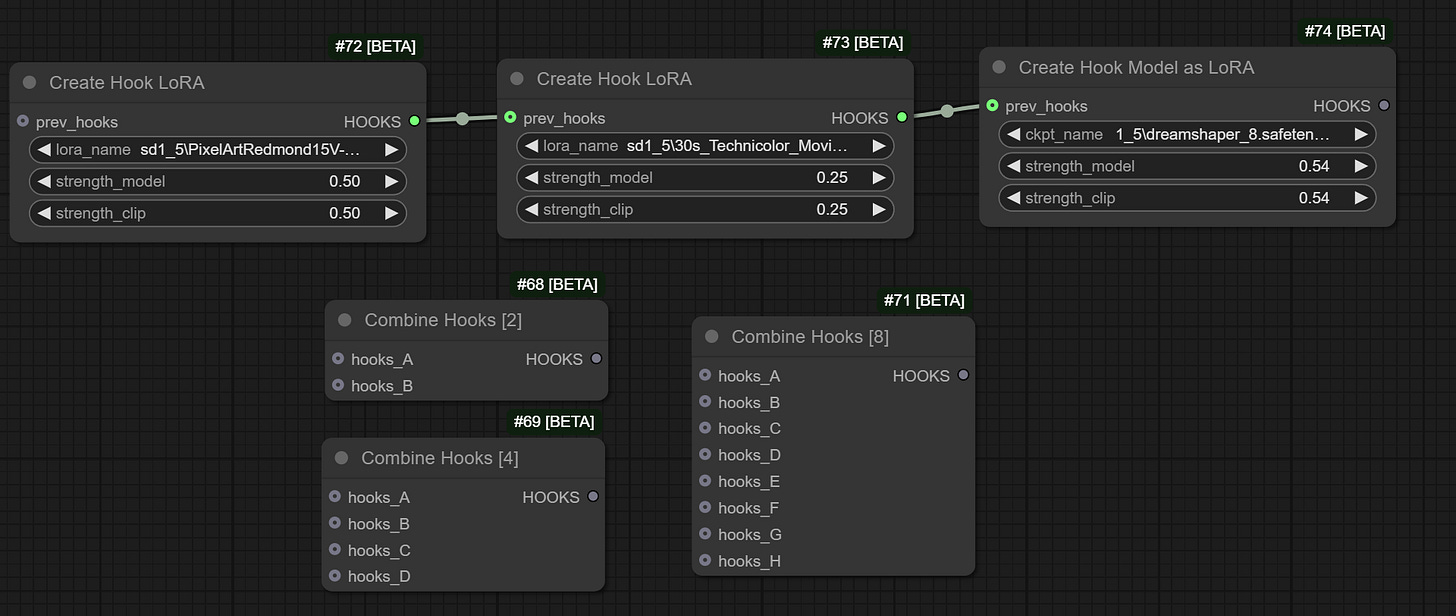

Both Create Hook Model as LoRA and Create Hook LoRA nodes have an optional 'prev_hooks' input. This can be used to chain multiple hooks, allowing you to use multiple LoRAs and/or Model-as-LoRAs together, at whatever strengths you desire. There are also some manual Combine Hooks nodes for combining different chains of hooks that you might want to use in different places without creating brand new chains:

Now, let's look at Cond Pair Set Props, and its related nodes, and what it's there for.

If you count all the conditioning we have now from the top and bottom branches, we have 4 in total - 2 for the positive conditioning, and 2 for the negative. And if you look over at the KSampler node, we only have room for 2 conditionings - 1 positive, 1 negative. We have to somehow make it fit by telling it, "hey, here is all the positive conditioning, and here is all the negative conditioning".

The solution is to combine the same type of conditioning - positive with positive, negative with negative - before it gets passed into the KSampler node.

Technically, there already was a native node that can do this, Conditioning (Combine), but it only works on one conditioning at a time. That is perfect for something like Flux, which most often does not make use of CFG (so no negative prompt is needed), but when you need both positive and negative conditioning, here is how that looks:

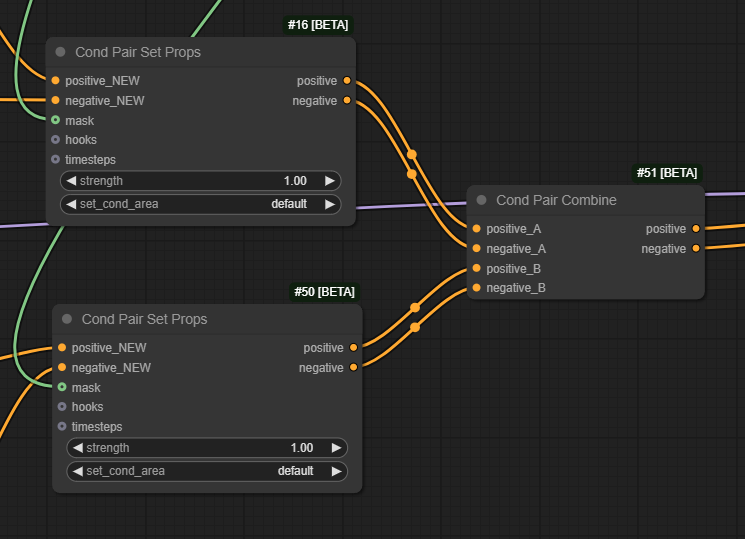

It quickly becomes a bit much - if you accidentally combine positive with negative, you will get completely unintended results. So, one of the new nodes is the Cond Pair Combine node:

No wires cross this time, and it's clear where the noodles should go. But, we haven't assigned any masks yet, and this is the masking portion of the tutorial after all. As before, there just so happens to be a native node that can assign a mask to a conditioning, but just one conditioning at a time. And maybe you'd want to assign hooks manually to conditioning as well, or have that conditioning only apply for a portion of the sampling process. That is where the Cond Set Props and Cond Pair Set Props nodes come in:

Cond/Cond Pair Set Props simply combine the abilities of the Conditioning (Set Mask) and ConditioningSetTimestepRange nodes, along with being able to add any additional hooks (or to add some to begin with, if the Set CLIP Hooks node was not used or apply_to_conds was toggled to False). If a mask, hooks, or timesteps input is not given, the node will not modify the corresponding previous values. Note that hooks are additive: you cannot replace the hooks that are already there; whatever is passed in here will be added to the list of hooks to apply. If you apply hooks that are already present on the conditioning, no worries - it will not double apply the weights.
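The rules above (unset inputs leave existing values alone, hooks are additive, duplicate hooks are not applied twice) can be sketched as follows. The function `set_props`, the dict keys, and the use of plain strings as hook names are all assumptions made for illustration; the actual node works on ComfyUI's own conditioning and hook objects.

```python
def set_props(cond, mask=None, hooks=None, timesteps=None):
    """Return a copy of `cond` with the given properties applied.

    Hypothetical model of the behavior described in the text:
    - inputs left as None do not touch the existing value
    - hooks are appended to the existing ones, never replaced
    - a hook that is already present is not added a second time
    """
    new_cond = dict(cond)
    if mask is not None:
        new_cond["mask"] = mask
    if timesteps is not None:
        new_cond["timesteps"] = timesteps
    if hooks:
        merged = list(new_cond.get("hooks", []))
        for hook in hooks:
            if hook not in merged:
                merged.append(hook)
        new_cond["hooks"] = merged
    return new_cond


cond = {"prompt": "a castle", "hooks": ["style_lora"]}
updated = set_props(cond, mask="left_half", hooks=["style_lora", "char_lora"])
print(updated)
```

Here `style_lora` is passed in a second time but still appears only once, and no `timesteps` entry is created because none was given.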

If you'd like to mask conditioning, all conditioning must have a mask applied (or be defined as default, more on this later). A conditioning without a mask is treated the same as if it should apply to the whole image, which will give unintended results, as if no masking was done at all in most cases. If you feel like masking is not working, double check that all conditioning has some masks assigned. With only two pairs of conditioning, an easy way to ensure all areas are covered by both masks is to simply invert the mask used for one and use that inverted mask for the other.
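As a rough illustration of why inverting one mask guarantees full coverage with two pairs of conditioning, here is a sketch using nested lists as masks with values in 0.0 to 1.0. The helper names `invert_mask` and `coverage` are made up for this example.

```python
def invert_mask(mask):
    """Return 1 - mask for every pixel."""
    return [[1.0 - value for value in row] for row in mask]


def coverage(masks):
    """Per-pixel sum of all masks; every pixel should be > 0 to be covered."""
    rows, cols = len(masks[0]), len(masks[0][0])
    return [
        [sum(mask[r][c] for mask in masks) for c in range(cols)]
        for r in range(rows)
    ]


# A small horizontal gradient mask, similar in spirit to the one in the example.
mask_a = [
    [1.0, 0.75, 0.25, 0.0],
    [1.0, 0.75, 0.25, 0.0],
]
mask_b = invert_mask(mask_a)

total = coverage([mask_a, mask_b])
print(total)
```

Because the second mask is exactly the complement of the first, every pixel's total comes out to 1.0 and no area is left without conditioning.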

Now that we have conditioning with the proper hooks and masks applied, we need to combine them. Cond Pair Combine is perfectly capable of doing this, and is totally fine to use:

However, if you happen to have more masked conditionings, it can become tiresome to combine them all - here is what happens if you happen to have 4 pairs of conditioning, let's say for 4 different masks in total:

Half the nodes on screen are just combining the conditioning: it takes 7 nodes to do what you'd want those 4 Set Props nodes to accomplish on their own. And so, the Cond/Cond Pair Set Props Combine nodes come into play:

It performs the actions of both - the mask/hooks/timesteps inputs only affect the inputs marked with _NEW (same as for Cond Pair Set Props node) and then combines it with the other positive/negative inputs. Exact same behavior as what the node setup further up did, except with 4 nodes.

Returning to the provided example workflow, with the conditioning combined and masks applied, we can pass the conditioning into the KSampler node to let it do its thing - it will automatically apply the desired hooks for the corresponding conditioning.

When working on masks, especially when you want to apply more than just 2, it may become a chore to make sure every pixel is accounted for. You know there is an area in the image that received no conditioning when you get beige output like this:

This is what the Cond/Cond Pair Set Default Combine nodes are for; they will automatically apply whatever conditioning is provided to them, at sampling time, to any areas that they find have not been assigned anything at all. In our example, we could skip inverting the mask for the 2nd pair of conditioning by using this node:

Just as with the Cond Pair Set Props Combine node, the optional hooks input here is to add any additional hooks to the positive/negative_DEFAULT conds. Having this node plugged in when all areas are accounted for will NOT impact performance - it will simply be ignored at sampling time if it finds nowhere to apply it. For this reason, if your masks are intricate and/or numerous, it's a good idea to have this node connected with a good neutral prompt in case you miss some areas and want to avoid the beige of nothingness. Or you can use it as an easy way to replace what would be the final 'mask' that subtracts all other masks/areas from it. Very handy.
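Conceptually, the default conditioning fills whatever region no other mask touches. The sketch below computes that leftover region from a list of masks; it only illustrates the idea, and the helper name `uncovered_mask` and the exact zero test are assumptions, not ComfyUI's sampling-time logic.

```python
def uncovered_mask(masks):
    """Return a mask that is 1.0 where every given mask is 0.0, else 0.0."""
    rows, cols = len(masks[0]), len(masks[0][0])
    return [
        [
            1.0 if all(mask[r][c] == 0.0 for mask in masks) else 0.0
            for c in range(cols)
        ]
        for r in range(rows)
    ]


# Two masks that leave the right-most column unassigned.
left = [[1.0, 1.0, 0.0, 0.0]]
middle = [[0.0, 0.0, 1.0, 0.0]]

leftover = uncovered_mask([left, middle])
print(leftover)

# When the masks already cover everything, nothing is left for the default.
print(uncovered_mask([left, [[0.0, 0.0, 1.0, 1.0]]]))
```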

Now, to see what we actually got with this setup, let's switch to a harsher mask instead of a gradient:

Without the gradient, it's clear where each hook is applied. Interestingly, the diffusion models naturally bring the styles and content together. You may be surprised by what masking weights can do, especially when used for character or style LoRAs.

That pretty much covers it for masking, and how conditioning generally works for ComfyUI. You can add some ControlNets at the end of your conditioning chain to apply it to all conditioning or apply it to only select conditioning if you so wish.

Here are the masks I used while creating this tutorial:

Scheduling

Different weights at different steps.

Same as with masking, let's start with a simple workflow to analyze:

To handle scheduling weight strengths, we make use of Hook Keyframes. Each one consists of two pieces of information:

strength_mult (what to multiply the strength of the LoRA hook by)

start_percent (when the keyframe should take effect during sampling)

The start_percent, as with other ComfyUI nodes, is a decimal between 0.0 and 1.0 that corresponds to the relative step at which the keyframe's strength_mult should be applied. For example, if you have 30 sampling steps, a start_percent of 0.5 would mean it gets applied at step 15. A start_percent of 0.0 would mean it gets applied from the very beginning of sampling.

Important note: before the linked commit below on December 5th, there was a bug where Flux and similar models that used a shifted sigma schedule did not appropriately return an accurate relative percent, whether that be for hook keyframes or ControlNet scheduling. Some sampling schedulers may also potentially make the percents not line up properly with the expected steps.
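Setting those scheduler caveats aside, the idealized mapping from start_percent to a step number is a simple multiplication. The helper `percent_to_step` below is a hypothetical name and assumes a purely linear relationship, which real samplers and sigma schedules may not follow exactly.

```python
def percent_to_step(start_percent, total_steps):
    """Idealized linear mapping from a 0.0-1.0 percent to a step index."""
    if not 0.0 <= start_percent <= 1.0:
        raise ValueError("start_percent must be between 0.0 and 1.0")
    return int(start_percent * total_steps)


print(percent_to_step(0.5, 30))
print(percent_to_step(0.0, 30))
```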

Here is an example of two Hook Keyframes creating a schedule where from the very start (start_percent=0.0), the strengths set in the Create Hook LoRA node will be multiplied by 0.5, then in the middle (start_percent=0.5), the original strengths will be multiplied by 1.0 instead (meaning back to their original state). Note: strength at start_percent=0.0 is assumed to be 1.0 unless specified otherwise by a keyframe:

Each keyframe will require the weights to be recalculated at sampling time, so the more keyframes you have, the more little 'hiccups' in sampling speed you'll notice for those steps; for this reason, apply keyframes conservatively. Keyframes can be chained together via the prev_hook_kf inputs. Internally, hook keyframes sort themselves by their start_percent, so the order you plug them in will not make a difference unless you assign multiple keyframes the same start_percent.
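A minimal sketch of how a sorted keyframe list could resolve to a strength multiplier at a given point in sampling. The `HookKeyframe` dataclass and `active_strength_mult` function are hypothetical stand-ins, and the "hold the last keyframe until the next one starts" rule is an assumption based on the two-keyframe example described earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HookKeyframe:
    """Hypothetical stand-in for a hook keyframe."""
    strength_mult: float
    start_percent: float


def active_strength_mult(keyframes, percent):
    """Return the multiplier in effect at `percent` (0.0 to 1.0).

    Keyframes are sorted by start_percent, so plug-in order does not matter.
    Before the first keyframe takes effect, the multiplier is assumed to be 1.0.
    """
    mult = 1.0
    for keyframe in sorted(keyframes, key=lambda kf: kf.start_percent):
        if keyframe.start_percent <= percent:
            mult = keyframe.strength_mult
        else:
            break
    return mult


# The two-keyframe schedule from the text, deliberately given out of order.
schedule = [
    HookKeyframe(strength_mult=1.0, start_percent=0.5),
    HookKeyframe(strength_mult=0.5, start_percent=0.0),
]

for percent in (0.0, 0.25, 0.5, 0.9):
    print(percent, active_strength_mult(schedule, percent))
```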

Making schedules by hand like this when you want to quickly change the spacing between values is annoying. So, there are two helper nodes to assist: Create Hook Keyframes Interp. and Create Hook Keyframes from Floats:

Create Hook Keyframes from Floats will take in an input of type FLOATS (currently only AnimateDiff-Evolved's Value Scheduling node outputs this type, but more nodes will begin using this to differentiate themselves from FLOAT), and space them out evenly based on the number of inputs given. Say you have 5 floats passed in. It will put the first at start_percent=0.0 with the strength being the value of that first float, the second at start_percent=0.25 with strength being the value of the second float, and so on until the last value being placed at start_percent=1.0.
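The even spacing described above works out to start_percent = i / (n - 1) for the i-th of n floats. Here is a small sketch of that arithmetic; `keyframes_from_floats` is a hypothetical helper returning (start_percent, strength) tuples, and placing a single float at 0.0 is an assumption, since the text does not cover that case.

```python
def keyframes_from_floats(floats):
    """Spread the given strengths evenly over start_percent 0.0 to 1.0."""
    count = len(floats)
    if count == 1:
        # Assumption: a lone value is placed at the very start.
        return [(0.0, floats[0])]
    return [(index / (count - 1), value) for index, value in enumerate(floats)]


for start_percent, strength in keyframes_from_floats([0.2, 0.4, 0.6, 0.8, 1.0]):
    print(start_percent, strength)
```

With 5 floats the start_percents land on 0.0, 0.25, 0.5, 0.75, and 1.0, matching the description.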

For simpler schedules that you want to create without manually inputting values, you can use the Create Hook Keyframes Interp. node. Some key things:

strength_start is the strength of the first keyframe to be created

strength_end is the strength of the last keyframe to be created

start_percent is the start_percent of the first keyframe created

end_percent is the start_percent of the last keyframe created

keyframes_count determines how many total keyframes to create, including the start and end keyframes

interpolation determines how to scale the strengths in between; the start_percents of the keyframes will remain evenly spaced (linear) no matter the selection.

The print_keyframes toggle is useful to see the actual values assigned to the keyframes in the console:

If you look in the provided example, I've made the end_percent 0.5 instead of the default 1.0. This results in these keyframes:

This means that instead of reaching 1.0 by the end of sampling, the strength reaches 1.0 much earlier, halfway through.
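To see how the parameters interact, here is a sketch that generates evenly spaced keyframes with linear interpolation only. The function `interp_keyframes` is hypothetical, the other interpolation modes are not modeled, and the values of strength_start and keyframes_count used below are picked for illustration, not taken from the node's defaults or the example workflow.

```python
def interp_keyframes(strength_start, strength_end, start_percent, end_percent,
                     keyframes_count):
    """Return (start_percent, strength) tuples, linearly interpolated.

    The start_percents are always evenly spaced; only linear strength
    interpolation is sketched here.
    """
    if keyframes_count < 2:
        raise ValueError("need at least a start and an end keyframe")
    keyframes = []
    for index in range(keyframes_count):
        t = index / (keyframes_count - 1)
        percent = start_percent + (end_percent - start_percent) * t
        strength = strength_start + (strength_end - strength_start) * t
        keyframes.append((percent, strength))
    return keyframes


# Illustrative values: ramp from 0.0 to 1.0, finishing at the halfway point.
for percent, strength in interp_keyframes(0.0, 1.0, 0.0, 0.5, 5):
    print(f"start_percent={percent} strength={strength}")
```

With end_percent set to 0.5, the last keyframe (strength 1.0) lands at the midpoint of sampling, and that strength then stays in effect for the remaining steps.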

Hook keyframes can be assigned to hooks via the Set Hook Keyframes node:

This node will overwrite the schedule on the hooks provided to it. If you wish to give different hooks unique schedules without overwriting those of previous hooks, you can use the Combine Hooks nodes mentioned in the masking section:

Returning to the example scheduling workflow, we can now talk about the schedule_clip toggle on the Set CLIP Hooks node we skipped in the masking section. When schedule_clip is set to False, scheduling weights will ONLY apply to conditioning [model], not CLIP; instead, CLIP will only use the original strength_clip defined in the Create Hook LoRA/Model as LoRA nodes. When set to True, conditioning will be created for each scheduled CLIP weight; depending on how many keyframes you have, you will notice the CLIP Text Encode nodes take longer to run (and a progress bar will appear on the node). The results are not always better if you schedule CLIP, so that is why it is off by default to avoid the extra compute time, but feel free to set it to True and try it out.

And that is basically it for this workflow. The Cond Pair Set Props is completely unnecessary - I only left it in case you'd like to use it to assign some extra hooks to apply to only the conditioning and not CLIP, but removing it will not change the results at all, as long as you have apply_to_conds set to True.

What's the purpose of scheduling LoRAs/Model-as-LoRAs?

Some models and LoRAs may produce the style you want, but not the composition or variety that you desire, or vice versa. Earlier steps in sampling determine overall composition and layout, so you could schedule the LoRAs/models to run at the times their features are desired.

Let's take the scheduling workflow as an example. Here is the comparison between using just the base model, the scheduled LoRA, and the unscheduled LoRA:

The scheduled result maintains the composition of the base model, while still applying the stylization. The schedule and strengths can be tweaked to get the results you desire.

Can you combine masking and scheduling?

Yes.

lorahooksmaskingscheduling.json

Just a combination of both workflows.

And that's about it. Excited to see the things people use this for.

This is interesting. I worked on a flux region spatial control pipeline. I am trying to apply Lora to region-specific flux attention; however, the current system applies to the entire attention. I will study this in more detail. The regional solution I devised uses BBOX and Masking and feathering at the attention level, and we override the flux attention. Since this is now built into Comfy, I can try and adapt the custom pipeline to apply Lora to specific regions in Flux. Technically it should work using the technique showcased here.

So what's the difference between Create Hook LoRA v.s. Create Hook Model as LoRA?