Intel OpenVINO Joins Forces with ComfyUI to Enhance AI Creation Efficiency

The Intel OpenVINO toolkit (https://openvino.ai/), known for optimizing and deploying AI models on Intel hardware, has partnered with the AI workflow platform ComfyUI. A recently merged pull request (PR) adds an OpenVINO node to ComfyUI. The node brings OpenVINO's inference acceleration into ComfyUI workflows and improves workflow efficiency for creators on Intel hardware.

Intel processors combine a CPU, GPU, and NPU, which gives developers a high-performance, low-power, and flexible platform for AI development. The OpenVINO toolkit simplifies model optimization and deployment and supports a wide range of AI frameworks. As a result, developers can improve AI application performance while keeping resource use and energy consumption low.

Collaboration Details

The merged PR adds a new OpenVINO node to ComfyUI that lets users call OpenVINO's inference engine directly within ComfyUI workflows. The node uses Intel's hardware acceleration and supports CPU, GPU, and NPU targets. OpenVINO's model quantization and hardware-specific tuning let users run AI models in ComfyUI more efficiently without complex configuration. ComfyUI is already known for optimizing local GPU inference and managing resources well, including support for consumer graphics cards with little VRAM. This node expands the range of hardware ComfyUI supports and marks a key milestone in the technical integration of the two platforms.
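
As an illustration of how a device might be chosen among CPU, GPU, and NPU, here is a minimal Python sketch. It assumes the `openvino` package is installed for the real device query: `openvino.Core().available_devices` returns names such as "CPU", "GPU", or "NPU", with indexed names like "GPU.0" when more than one GPU is present. The `pick_device` helper and its preference order are hypothetical and are not the ComfyUI-OpenVINO node's actual selection logic.

```python
from typing import Optional, Sequence


def list_openvino_devices() -> list[str]:
    """Return OpenVINO's device names, or [] if openvino is not installed."""
    try:
        import openvino as ov
    except ImportError:
        return []
    return list(ov.Core().available_devices)


def pick_device(
    available: Sequence[str],
    preference: Sequence[str] = ("GPU", "NPU", "CPU"),
) -> Optional[str]:
    """Return the first available device whose family matches the preference order.

    Hypothetical helper: it matches on the device family, so "GPU" also
    matches indexed names such as "GPU.0" or "GPU.1".
    """
    for family in preference:
        for name in available:
            if name.split(".")[0] == family:
                return name
    return None


if __name__ == "__main__":
    devices = list_openvino_devices()
    print("Available:", devices)
    print("Selected:", pick_device(devices))
```

The preference order is an arbitrary choice for the example. In practice, the node presents the detected devices and lets the user pick one.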

User Benefits

For creators on Intel hardware, the integration has practical benefits. The OpenVINO node reduces inference time and latency, so high-quality images and videos are generated faster in ComfyUI. It also makes better use of available resources, which helps with large projects and with workloads that require real-time computation. Whether they use the CPU, GPU, or NPU of Intel's latest AI PCs, creators get smoother, more efficient workflows from the hardware they already have. Notably, Intel chips power 75% of the PC market, so most users can benefit from this optimization without upgrading their hardware.

Usage Guide

Follow these steps to activate the OpenVINO node in ComfyUI:

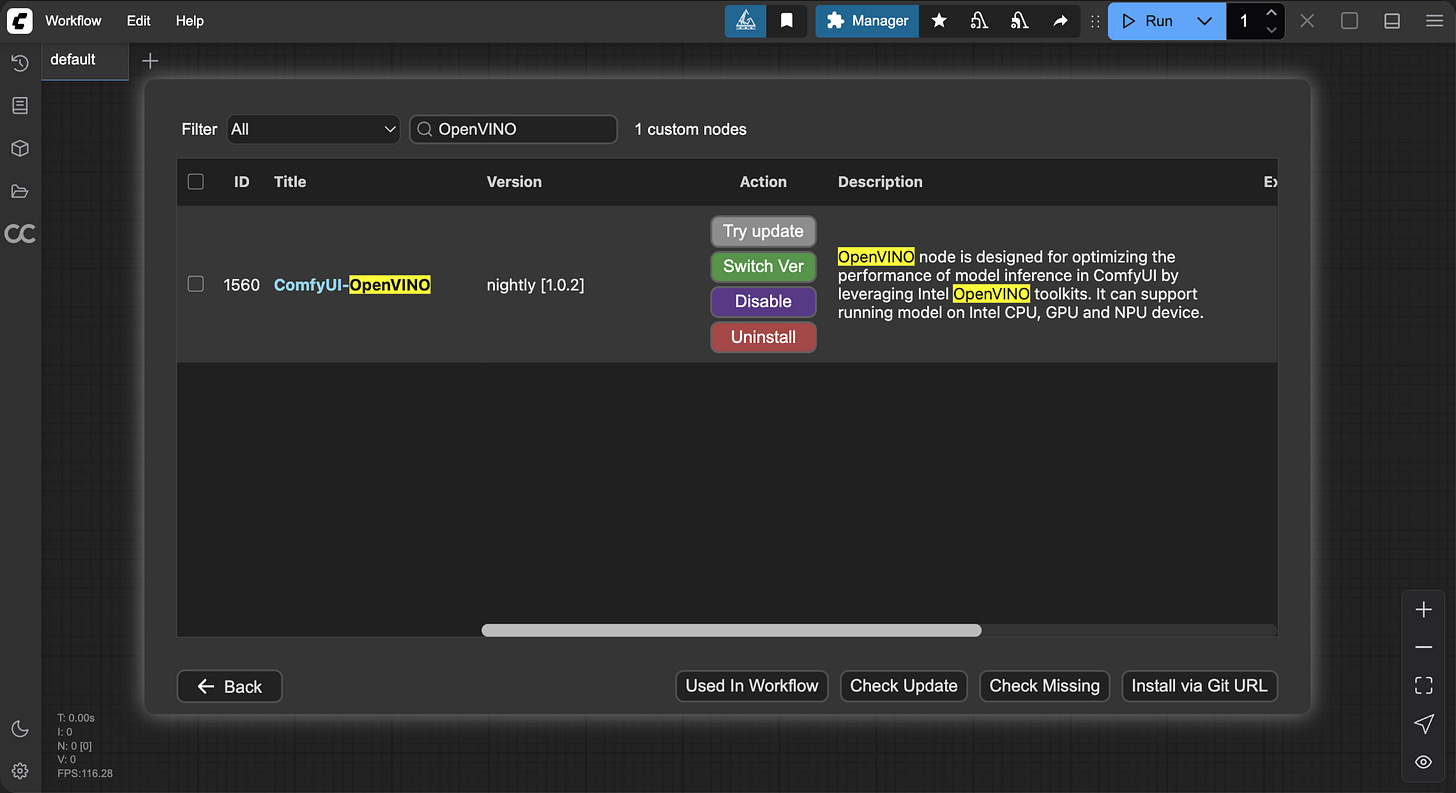

Install the OpenVINO Node

In ComfyUI-Manager, search for the ComfyUI-OpenVINO node and install it.

Add ComfyUI Launch Parameters

For a standard installation, launch ComfyUI with:

python3 main.py --cpu --use-pytorch-cross-attention

For the Windows portable version:

.\python_embeded\python.exe -s ComfyUI\main.py --cpu --use-pytorch-cross-attention --windows-standalone-build
pause

Load Any Workflow

Load any workflow in ComfyUI.

Connect the OpenVINO Node

Connect the OpenVINO node to any Model or LoRA loader. The node lists the deployment devices available on your system.

Run the Workflow

Run the workflow. The OpenVINO node adapts ComfyUI's original model through PyTorch's torch.compile mechanism, so the first run after switching models triggers a warm-up compilation that makes that first inference slower. This cost is paid once per model. After compilation completes, subsequent runs benefit from OpenVINO's acceleration on Intel hardware.
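
The first run after a model switch includes compilation, so a fair speed comparison should time that first call separately from later calls. The sketch below is a generic, standard-library-only timing harness. `make_fake_compiled_model` is a hypothetical stand-in that simulates a one-time compile delay with a sleep. It does not reflect how the OpenVINO node or `torch.compile` works internally.

```python
import statistics
import time
from typing import Callable


def time_warmup(fn: Callable[[], object], runs: int = 5) -> tuple[float, float]:
    """Return (first_call_seconds, median_of_later_calls_seconds)."""
    if runs < 2:
        raise ValueError("need at least 2 runs to separate warm-up from steady state")
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return timings[0], statistics.median(timings[1:])


def make_fake_compiled_model(compile_seconds: float = 0.2) -> Callable[[], int]:
    """Hypothetical stand-in: the first call 'compiles' (sleeps), later calls skip it."""
    state = {"compiled": False}

    def run() -> int:
        if not state["compiled"]:
            time.sleep(compile_seconds)  # simulated one-time compilation
            state["compiled"] = True
        return sum(range(1000))  # simulated inference work

    return run


if __name__ == "__main__":
    first, steady = time_warmup(make_fake_compiled_model())
    print(f"first call: {first:.3f}s, steady state: {steady:.5f}s")
```

To measure a real workflow, replace the stand-in with a callable that runs one generation. Compare the steady-state median, not the first call.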

Community Impact

The collaboration between OpenVINO and ComfyUI improves platform performance and also benefits the broader AI creation community. Local inference and hardware compatibility are key strengths of ComfyUI. By making AI content generation more efficient on Intel hardware, the partnership gives creators, from hobbyists to professionals, more hardware choices and new possibilities. It also supports a more inclusive AI ecosystem in which hardware-specific optimizations can be easily integrated into popular platforms like ComfyUI. The AI PC market is growing rapidly: Intel AI PC shipments are expected to reach 100 million globally by the end of 2025, with 30% of them in China. Given that growth, this collaboration is expected to have a significant impact worldwide, especially in the important Chinese market.

Summary and Outlook

The integration of the OpenVINO node into ComfyUI is a significant milestone in AI content creation. Intel’s optimization expertise combined with ComfyUI’s user-friendly interface sets a new benchmark for efficiency and performance for Intel hardware users. Looking ahead, as both parties continue to innovate, the AI community can expect more exciting technological advancements, further pushing the boundaries of creative expression.

About Intel OpenVINO

Intel OpenVINO (Open Visual Inference and Neural Network Optimization) is an open-source toolkit from Intel for optimizing and deploying deep learning inference on Intel hardware (CPU, GPU, NPU). It supports multiple model formats, such as PyTorch and ONNX. It uses techniques like INT4/INT8 quantization and speculative decoding to reduce generation latency and improve inference efficiency. OpenVINO is integrated with ecosystems such as Hugging Face, ModelScope, and Ollama, and is widely used for large language models (LLMs), image generation, and multimodal tasks. It enables efficient local AI applications on AI PCs and edge devices.
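
To illustrate what INT8 weight quantization does, the sketch below applies a generic textbook scheme, symmetric per-tensor quantization with scale = max|w| / 127, to a short list of weights and then restores them. This scheme was chosen for clarity. It is an assumption, not the exact algorithm OpenVINO uses, which may rely on per-channel scales, asymmetric ranges, or grouped INT4 weights.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor INT8 quantization: q = round(w / scale), scale = max|w| / 127."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Avoid dividing by zero when every weight is zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamp to [-127, 127] so the integer range stays symmetric around zero.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Map INT8 codes back to approximate floating-point weights."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.5, -1.27, 0.003, 1.0]
    q, scale = quantize_int8(w)
    restored = dequantize_int8(q, scale)
    max_err = max(abs(a - b) for a, b in zip(w, restored))
    print("codes:", q, "scale:", scale, "max abs error:", max_err)
```

Each weight is stored in 1 byte instead of 4 (FP32), and the round-trip error per weight is at most half the scale. Small weights such as 0.003 can round to zero, which is why practical schemes often use finer-grained scales.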

GitHub: https://github.com/openvinotoolkit/openvino

Hugging Face: https://huggingface.co/OpenVINO

ModelScope: https://www.modelscope.cn/openvino

Docs: https://docs.openvino.ai/
