LTX-2 is natively supported in ComfyUI on Day 0

LTX-2 delivers high-quality visual output while maintaining good resource and speed efficiency.

Hi community! We’re excited to announce that LTX-2, an open-source audio–video AI model, is now natively supported in ComfyUI!

The model generates motion, dialogue, background noise, and music together in a single synchronized pass, producing cohesive audio-video output. It is easy to customize within an open, transparent framework, giving developers creative freedom and control.

Model Highlights

LTX-2 brings synchronized audio-video generation to ComfyUI. It renders dynamic scenes with natural movement and expression, offers flexible control through multiple input modalities, and runs efficiently on consumer-grade hardware.

Open-source audio-video foundation model

Generates motion, dialogue, SFX, and music together

Canny, Depth & Pose video-to-video control

Keyframe-driven generation

Native upscaling and prompt enhancement

Example Outputs

Text to Video

Example 1:

A close-up of a cheerful girl puppet with curly auburn yarn hair and wide button eyes, holding a small red umbrella above her head. Rain falls gently around her. She looks upward and begins to sing with joy in English: "It's raining, it's raining, I love it when its raining." Her fabric mouth opening and closing to a melodic tune. Her hands grip the umbrella handle as she sways slightly from side to side in rhythm. The camera holds steady as the rain sparkles against the soft lighting. Her eyes blink occasionally as she sings.

Example 2:

A man in a black tuxedo stands motionless in a small, red-tiled bathroom, facing a mirror. The camera sits just behind his right shoulder, framing both his back and his solemn reflection. Suddenly, he opens his mouth and begins to sing opera in Italian: "La donna è mobile, qual piuma al vento." Rich, resonant notes echo through the space. As his voice climbs in pitch, his brows lift, and his expression becomes more passionate, almost vulnerable. The overhead lighting casts a sharp glow on his face and tuxedo, reflecting in the glossy red tiles around him. The camera is static

Image to Video

Example 1

Input

A close-up shot of a young waitress in a retro 1950s diner, her warm brown eyes meeting the camera with a gentle smile. She wears a black polka-dot dress with an elegant cream lace collar, her reddish-brown hair styled in an elaborate updo with delicate curls framing her freckled face. Soft, warm light from overhead fixtures illuminates her features as she stands behind a yellow counter. The camera begins slightly to her side, then slowly pushes in toward her face, revealing the subtle rosy blush on her cheeks. In the blurred background, the soft teal walls and a glowing red "Diner" sign create a nostalgic atmosphere. The ambient sounds of clinking dishes, distant conversations, and the gentle hum of a jukebox fill the air. She tilts her head slightly and says in a friendly, warm voice: "Welcome to Rosie's. What can I get for you today?" The mood is inviting, timeless, and full of classic American diner charm.

Example 2

Input

Control to Video

Getting Started

Update your ComfyUI to the nightly version (Desktop and Comfy Cloud will be ready soon).

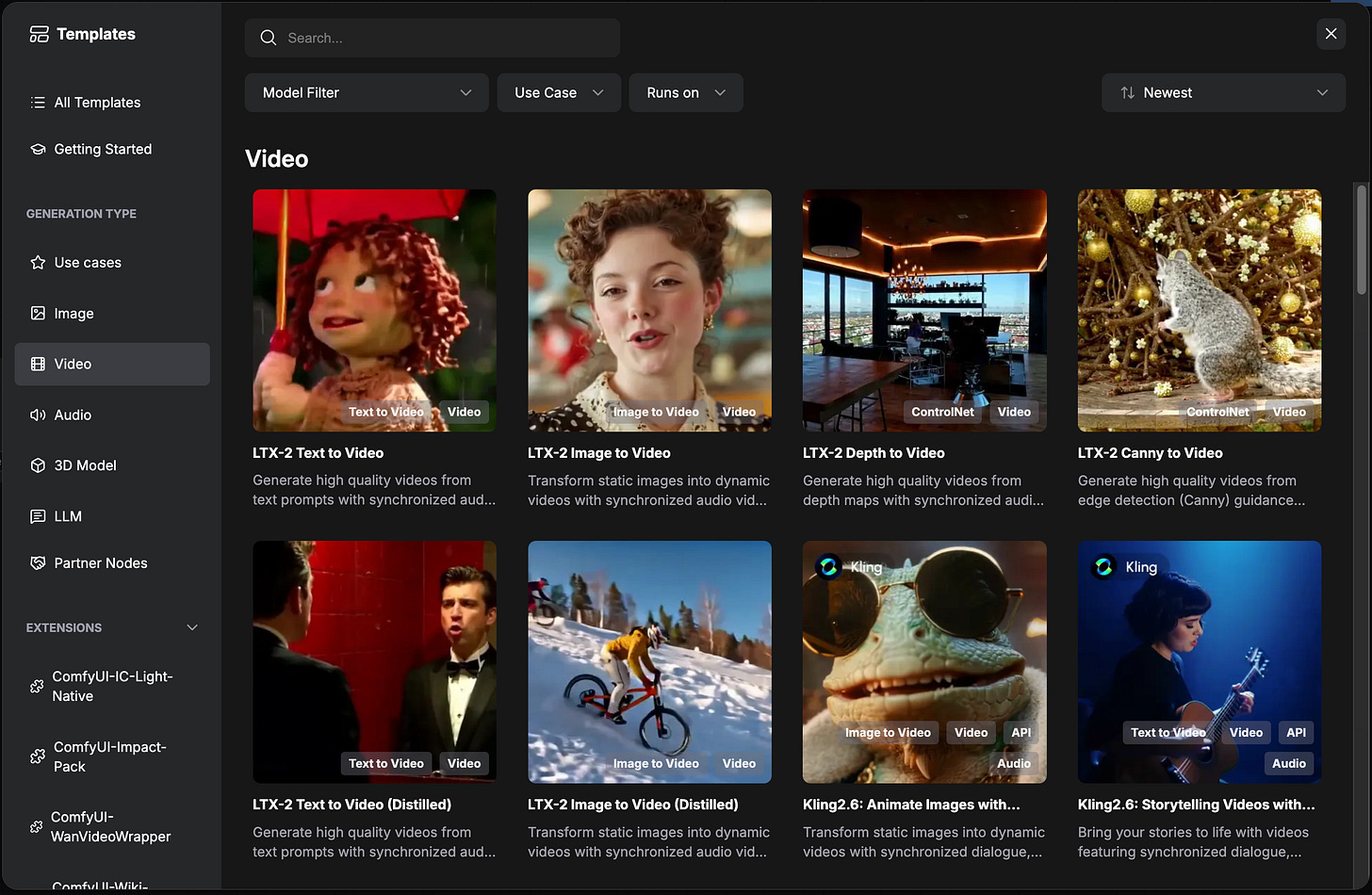

Go to the Template Library → Video → choose any LTX-2 workflow.

Follow the pop-up to download the models, check all inputs, and run the workflow.
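If you prefer to queue a workflow from a script instead of the UI, the sketch below shows one way to do it. It assumes a local ComfyUI server at the default address `127.0.0.1:8188` and a workflow exported in API format; the file name `ltx2_workflow_api.json` and the helper names are hypothetical, so adjust them to your setup.

```python
import json
import urllib.request

# Assumption: ComfyUI is running locally on its default host and port.
COMFYUI_URL = "http://127.0.0.1:8188"


def build_queue_request(workflow: dict, base_url: str = COMFYUI_URL) -> urllib.request.Request:
    """Build (but do not send) a POST /prompt request that queues a workflow."""
    payload = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/prompt",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue_workflow(path: str, base_url: str = COMFYUI_URL) -> dict:
    """Load an API-format workflow JSON file and queue it on the server."""
    with open(path, "r", encoding="utf-8") as f:
        workflow = json.load(f)
    with urllib.request.urlopen(build_queue_request(workflow, base_url)) as resp:
        return json.loads(resp.read())


if __name__ == "__main__":
    # Hypothetical file name: export your own LTX-2 workflow in API format first.
    print(queue_workflow("ltx2_workflow_api.json"))
```

The models still need to be downloaded through the pop-up first; the script only queues a workflow that already runs in the UI.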

Performance Optimization by NVIDIA

We partnered with NVIDIA and Lightricks to push local AI video forward.

NVFP4 and NVFP8 checkpoints are now available for LTX-2. With NVIDIA-optimized ComfyUI, LTX-2 delivers cloud-class 4K video locally, running up to 3X faster and using 60% less VRAM with NVFP4.
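To get a feel for what "up to 3X faster with 60% less VRAM" could mean for your own setup, here is a small back-of-the-envelope sketch. The baseline numbers are hypothetical placeholders, not measurements, and the stated gains are upper bounds, so treat the result as a best case.

```python
def apply_claimed_gains(
    baseline_seconds: float,
    baseline_vram_gb: float,
    speedup: float = 3.0,         # "up to 3X faster" (best case)
    vram_reduction: float = 0.60  # "60% less VRAM"
) -> tuple[float, float]:
    """Return the best-case (seconds, VRAM in GB) after applying the stated gains."""
    return baseline_seconds / speedup, baseline_vram_gb * (1.0 - vram_reduction)


if __name__ == "__main__":
    # Hypothetical baseline: a 90-second render that peaks at 40 GB of VRAM.
    seconds, vram_gb = apply_claimed_gains(90.0, 40.0)
    print(f"best case: about {seconds:.0f} s and {vram_gb:.0f} GB")
```

With that hypothetical baseline, the best case works out to roughly 30 seconds and 16 GB.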

Read more in NVIDIA's blog post, or refer to the quick guide to running LTX-2 in ComfyUI with NVIDIA GPUs.

As always, enjoy creating!

I have this error: Expected all tensors to be on the same device, but got tensors is on cpu, different from other tensors on cuda:0 (when checking argument in method wrapper_CUDA_cat)

Error.

SamplerCustomAdvanced

mat1 and mat2 shapes cannot be multiplied (466816x1 and 128x3)