OmniGen2 Native Support in ComfyUI!

Hi Comfy community! As many of you might already know, OmniGen2 is natively supported in ComfyUI!

Developed by the VectorSpaceLab team, OmniGen2 is a 7B-parameter unified multimodal model that combines text-to-image generation, image editing, and multi-image composition in one powerful architecture.

Model Highlights

Text-to-Image Generation: Create high-quality images from text prompts

Instruction-guided Editing: Make precise edits with natural language commands

Multi-Image Composition: Combine elements from multiple images seamlessly

Text in Images: Generate clear text content within images

Visual Understanding: Powered by Qwen2.5-VL for superior image analysis

Get Started

Update ComfyUI or ComfyUI Desktop to the latest version

Visit our documentation

Follow the guide in our documentation to download the models and workflows, then run them.

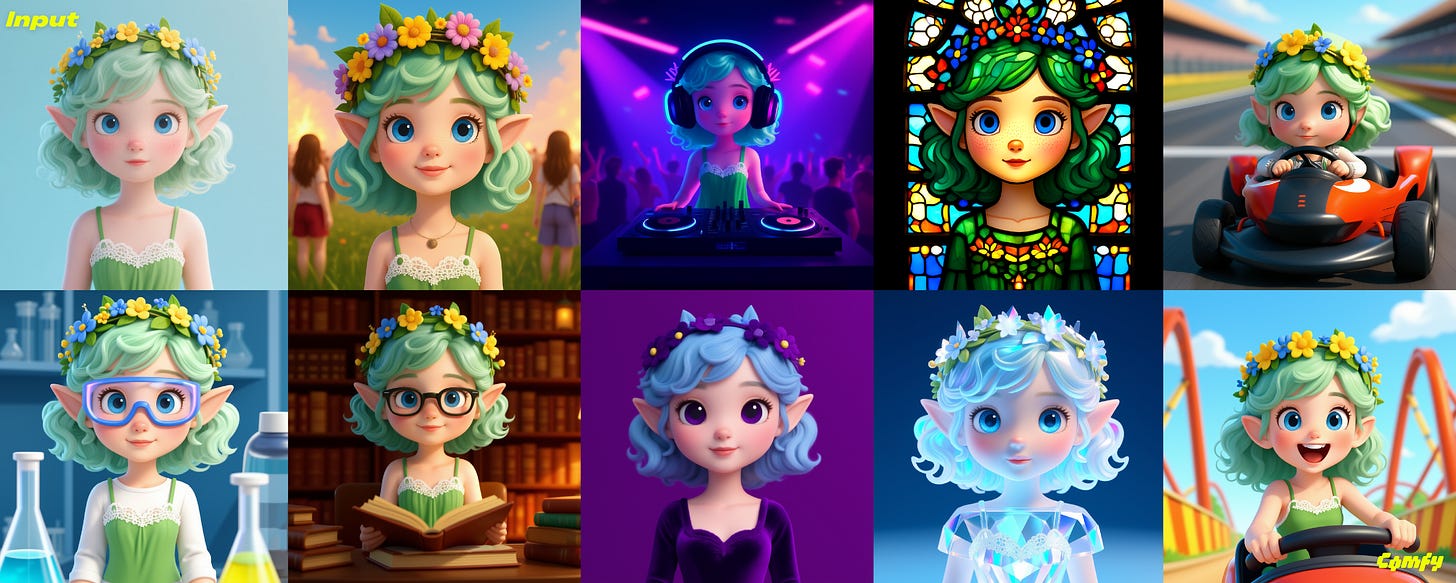

Example Outputs

Text-to-Image

Image Editing

Multiple Inputs

Check our documentation for more details: docs.comfy.org/tutorials/image/omnigen/omnigen2

As always, enjoy creating!

Both of the sample workflows are running fine on a Mac.

Runs like a charm on a 4090, and results seem a bit better than Flux Kontext.