Running Hunyuan with 8GB VRAM and PixArt Model Support

Hope everyone had a good Christmas! Check out some of the good news from ComfyUI:

Running HunyuanVideo with 8GB VRAM

With the release of ComfyUI v0.3.10, we are excited to share that it is now possible to run the HunyuanVideo model on GPUs with only 8GB VRAM. By adding temporal tiling support for video VAEs, exposed through the VAE Decode (tiled) and VAE Encode (tiled) nodes, we significantly reduced the VRAM requirement (previously 32GB) for running this cutting-edge video model.

What is Temporal Tiling

Temporal tiling is different from spatial tiling. Spatial tiling is simple because latent space and pixel space map onto each other cleanly. For 8x spatial compression, this looks like: 1x1 latent → 8x8 pixels, 2x1 latent → 16x8 pixels, 4x4 latent → 32x32 pixels, and so on.
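As a quick illustration of the spatial case, here is a minimal sketch of the mapping above. The function name `latent_to_pixel_size` is made up for this example and is not part of ComfyUI.

```python
def latent_to_pixel_size(latent_w: int, latent_h: int, factor: int = 8) -> tuple:
    """Map a latent tile size to its pixel size for a given spatial compression factor.

    Hypothetical helper for illustration only; every latent cell covers
    a factor x factor block of pixels.
    """
    return latent_w * factor, latent_h * factor


if __name__ == "__main__":
    for w, h in [(1, 1), (2, 1), (4, 4)]:
        print(f"{w}x{h} latent -> {latent_to_pixel_size(w, h)} pixels")
```

Because the mapping is a plain multiplication, a tile cut anywhere in latent space lines up exactly with a tile in pixel space.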

Temporal compression, on the other hand, does not map as evenly. For 4x compression: 1 latent → 1 frame, 2 latents → 5 frames, 3 latents → 9 frames. Each temporal latent gets expanded to 4 frames except the last one, which maps to a single frame, so n latents decode to 4 × (n − 1) + 1 frames.
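The pattern listed above fits frames = 4 × (latents − 1) + 1. A minimal sketch of that arithmetic, with hypothetical helper names that are not part of ComfyUI:

```python
def latents_to_frames(num_latents: int, factor: int = 4) -> int:
    """Number of video frames produced by `num_latents` temporal latents.

    All latents but one expand to `factor` frames; the remaining one
    maps to a single frame.
    """
    if num_latents < 1:
        raise ValueError("num_latents must be at least 1")
    return (num_latents - 1) * factor + 1


def frames_to_latents(num_frames: int, factor: int = 4) -> int:
    """Inverse mapping; only frame counts of the form factor * k + 1 are valid."""
    if num_frames < 1 or (num_frames - 1) % factor != 0:
        raise ValueError("num_frames must be of the form factor * k + 1")
    return (num_frames - 1) // factor + 1


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(f"{n} latent(s) -> {latents_to_frames(n)} frame(s)")
```

The extra "+ 1" is what makes temporal tiling less straightforward than spatial tiling: tile boundaries in latent space do not fall on a simple multiple of the frame count.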

Try Temporal Tiling for Low VRAM Consumption

To enable the temporal tiling configuration, you need to:

Update ComfyUI or ComfyUI Desktop to the latest version

Use the updated example workflow here

In the “VAE Decode (Tiled)” node, try lowering tile_size, overlap, temporal_size, or temporal_overlap if you have less than 32GB of VRAM

Remember to select an fp8 weight_dtype in the “Load Diffusion Model” node if you still run out of memory or want to speed up inference.
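To get a feel for why smaller temporal_size values reduce memory use, here is a rough sketch of how a sequence can be cut into overlapping temporal windows. This is an illustration of the general idea only, not ComfyUI's actual implementation; the function `temporal_windows` and the units of its arguments are assumptions.

```python
def temporal_windows(total_frames: int, temporal_size: int, temporal_overlap: int) -> list:
    """Split `total_frames` into overlapping (start, end) windows.

    Hypothetical helper: each window holds at most `temporal_size` frames
    and shares `temporal_overlap` frames with the previous window.
    """
    if total_frames < 1:
        raise ValueError("total_frames must be at least 1")
    if temporal_size <= temporal_overlap:
        raise ValueError("temporal_size must be larger than temporal_overlap")

    step = temporal_size - temporal_overlap
    windows = []
    start = 0
    while True:
        end = min(start + temporal_size, total_frames)
        windows.append((start, end))
        if end == total_frames:
            break
        start += step
    return windows


if __name__ == "__main__":
    # Smaller windows mean less data is processed at once, at the cost of more passes.
    print(temporal_windows(10, 4, 1))
```

Only one window needs to be held in memory at a time, so lowering the window size trades speed for a smaller peak footprint, while the overlap gives neighbouring windows shared frames to blend across.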

If you are new to HunyuanVideo, see our previous HunyuanVideo Support blog post for how to get started.

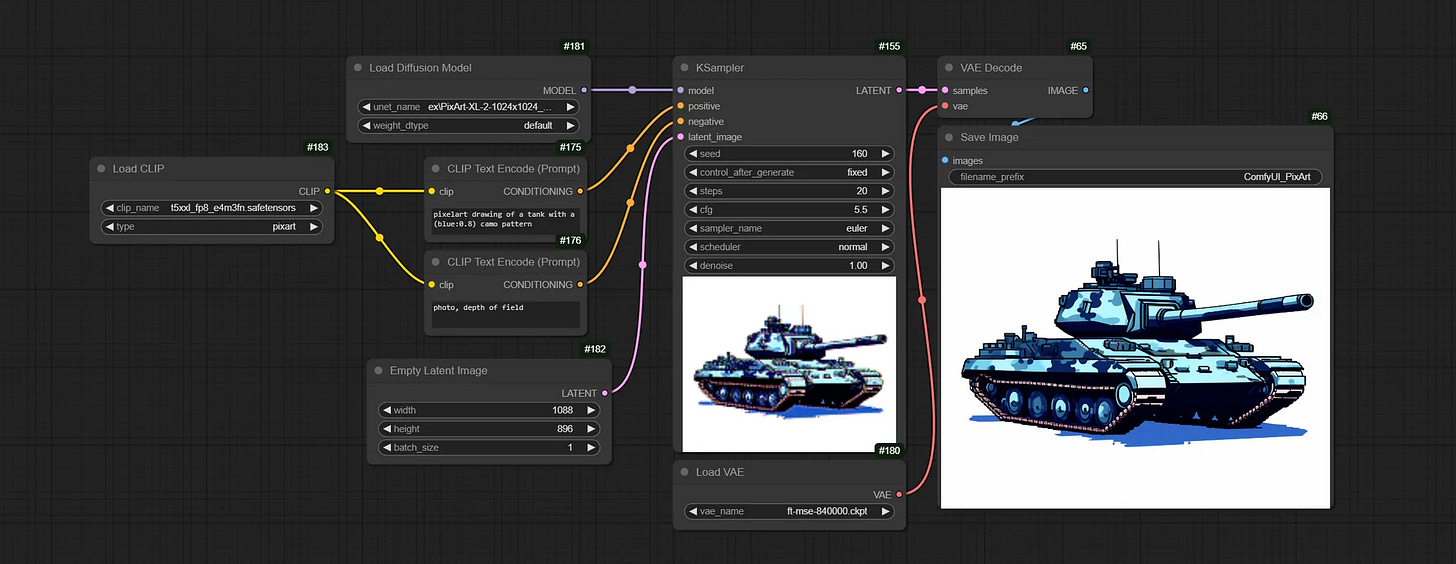

PixArt Native Model Support

We’ve also added native support for PixArt models. Shout-out to community member @city96 for the contribution.

PixArt is a family of high-quality diffusion models for generating images. The new support includes both Alpha and Sigma models, which come in different resolutions (1024x1024 and 512x512) and formats, as well as pre-trained weights.

Try the PixArt Native Workflow

Update ComfyUI to the latest version

Download the following model files:

Alpha weights PixArt-XL-2-1024-MS or Sigma weights PixArt-Sigma-XL-2-1024-MS → Place in ComfyUI/models/diffusion_models (you can also switch to other PixArt Alpha/Sigma weights)

Any T5XXL model from here → Place in ComfyUI/models/text_encoders

SD1.5 VAE for Alpha, SDXL VAE for Sigma → Place in ComfyUI/models/vae

Try the example workflow: PixArtSampleWorkflow.json
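Before loading the workflow, it can help to confirm that the three model folders mentioned above actually contain files. The following is a small sketch using only the Python standard library; the ComfyUI root path passed in is an assumption, so adjust it to your install location.

```python
from pathlib import Path

# Folder names are taken from the steps above; the ComfyUI root path is an assumption.
EXPECTED_DIRS = (
    "models/diffusion_models",
    "models/text_encoders",
    "models/vae",
)


def empty_model_dirs(comfy_root) -> list:
    """Return the expected model folders that are missing or contain no files.

    Any file counts, including placeholder files, so this is only a rough check.
    """
    root = Path(comfy_root)
    problems = []
    for rel in EXPECTED_DIRS:
        folder = root / rel
        has_file = folder.is_dir() and any(p.is_file() for p in folder.iterdir())
        if not has_file:
            problems.append(rel)
    return problems


if __name__ == "__main__":
    for rel in empty_model_dirs("ComfyUI"):
        print(f"No model files found in ComfyUI/{rel}")
```

The check does not verify that the files are the right models, only that each folder has something in it.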

ComfyUI Bi-weekly Office Hours

We have started holding office hours every two weeks on Fridays. The second session will be on Jan 3rd, 4-5pm PST!

Follow this event in our Discord: https://discord.gg/wSt4sjcg?event=1319396254760960041

We will host an AMA, chat with a special guest, and get feedback on the recent desktop experience. If you want to ask any questions, feel free to jump into the live chat or post them in our forum's AMA section: https://forum.comfy.org/c/ama/11

Also, please let us know if you want to share workflows or give a presentation. Hope to see you all!

MLLM text encoder: how do I add and use it?