Wan2.2 Day-0 Support in ComfyUI

The Wan team has officially released the open-source version of Wan2.2, and we are excited to announce Day-0 native support for Wan2.2 in ComfyUI!

In the A14B models, generation is split between a high-noise expert and a low-noise expert, each assigned a different range of denoising timesteps. This division of labor yields higher-quality video.
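The timestep split can be sketched in a few lines of Python. This is a minimal illustration, not Wan2.2's or ComfyUI's actual code: the linear schedule, the boundary value of 500, and the names `route_steps` and `expert_ranges` are hypothetical. Steps whose timestep is above the boundary (noisier latents) go to the high-noise expert, and the remaining steps go to the low-noise expert.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StepAssignment:
    step: int        # index of the denoising step
    timestep: float  # noise level at this step (higher = noisier)
    expert: str      # which expert model handles this step


def linear_timesteps(num_steps: int, t_max: float = 1000.0) -> list[float]:
    """Evenly spaced timesteps from t_max down toward 0.

    Illustrative only: real samplers and schedulers use other spacings.
    """
    return [t_max * (1 - i / num_steps) for i in range(num_steps)]


def route_steps(timesteps: list[float], boundary: float) -> list[StepAssignment]:
    """Send noisy steps (t > boundary) to the high-noise expert and the
    remaining, cleaner steps to the low-noise expert."""
    return [
        StepAssignment(i, t, "high_noise" if t > boundary else "low_noise")
        for i, t in enumerate(timesteps)
    ]


def expert_ranges(assignments: list[StepAssignment]) -> dict[str, tuple[int, int]]:
    """Collapse per-step routing into [start, end) step ranges per expert.

    Assumes timesteps decrease monotonically, so each expert's steps
    form one contiguous block.
    """
    ranges: dict[str, tuple[int, int]] = {}
    for a in assignments:
        start, _ = ranges.get(a.expert, (a.step, a.step))
        ranges[a.expert] = (start, a.step + 1)
    return ranges


if __name__ == "__main__":
    # Hypothetical setup: 20 steps, switching experts halfway through the noise range.
    plan = route_steps(linear_timesteps(20), boundary=500.0)
    print(expert_ranges(plan))  # {'high_noise': (0, 10), 'low_noise': (10, 20)}
```

Because timesteps only decrease during sampling, each expert ends up with one contiguous block of steps, so the work can be expressed as two back-to-back sampler passes, one per expert.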

The model is fully open-sourced under the Apache 2.0 license, enabling commercial use.

Highlights of Wan2.2

Mixture-of-Experts (MoE) Architecture: The high-noise expert handles the overall layout, while the low-noise expert refines details.

Cinematic Aesthetic Control: Understands professional camera language and supports multi-dimensional visual control over lighting, color, composition, and more.

Large-Scale Complex Motion: Smoothly reproduces a wide range of complex motions with better controllability and more natural movement.

Precise Semantic Adherence: Understands complex scenes, generates multiple objects, and more faithfully realizes your creative intent.

Efficient Compression Technology: Compared to Wan2.1, Wan2.2 is trained on substantially more data, and the 5B version pairs a high-compression VAE with optimized VRAM usage.
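To see why a higher-compression VAE helps, here is the latent-size arithmetic as a small Python sketch. It assumes the compression ratios published for the two VAEs (4× in time for both; 8× per spatial side for the Wan2.1 VAE and 16× for the Wan2.2 VAE). The 121-frame, 1280×704 clip is only an example, and `latent_grid` and `latent_positions` are hypothetical helpers.

```python
from typing import NamedTuple


class VAESpec(NamedTuple):
    name: str
    t_ratio: int  # temporal compression factor
    s_ratio: int  # spatial compression factor (applied to height and width)


# Compression ratios as stated in the Wan model cards (treat as assumptions).
WAN21_VAE = VAESpec("Wan2.1 VAE", t_ratio=4, s_ratio=8)
WAN22_VAE = VAESpec("Wan2.2 VAE", t_ratio=4, s_ratio=16)


def latent_grid(vae: VAESpec, frames: int, height: int, width: int) -> tuple[int, int, int]:
    """Return the (latent_frames, latent_height, latent_width) grid for a clip."""
    # Assumed causal video VAE: the first frame is kept on its own and the rest
    # are compressed in groups, so frame counts take the form t_ratio * k + 1.
    if (frames - 1) % vae.t_ratio != 0:
        raise ValueError(f"frames must be {vae.t_ratio}*k + 1, got {frames}")
    if height % vae.s_ratio or width % vae.s_ratio:
        raise ValueError(f"height and width must be multiples of {vae.s_ratio}")
    return (
        (frames - 1) // vae.t_ratio + 1,
        height // vae.s_ratio,
        width // vae.s_ratio,
    )


def latent_positions(vae: VAESpec, frames: int, height: int, width: int) -> int:
    """Total number of latent positions (ignores channel count)."""
    t, h, w = latent_grid(vae, frames, height, width)
    return t * h * w


if __name__ == "__main__":
    for vae in (WAN21_VAE, WAN22_VAE):
        grid = latent_grid(vae, frames=121, height=704, width=1280)
        print(vae.name, grid, latent_positions(vae, 121, 704, 1280))
```

For the example clip, the Wan2.2 VAE gives a 31×44×80 latent grid versus 31×88×160 for the Wan2.1 VAE, so the diffusion model works on 4× fewer latent positions. The sketch deliberately ignores latent channel counts and any further patchification inside the model.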

Available Models in ComfyUI

Wan2.2-TI2V-5B: Text/Image to video, FP16

Wan2.2-I2V-A14B: Image to video, FP16/FP8

Wan2.2-T2V-A14B: Text to video, FP16/FP8

Get Started

Update ComfyUI or ComfyUI Desktop to the latest version

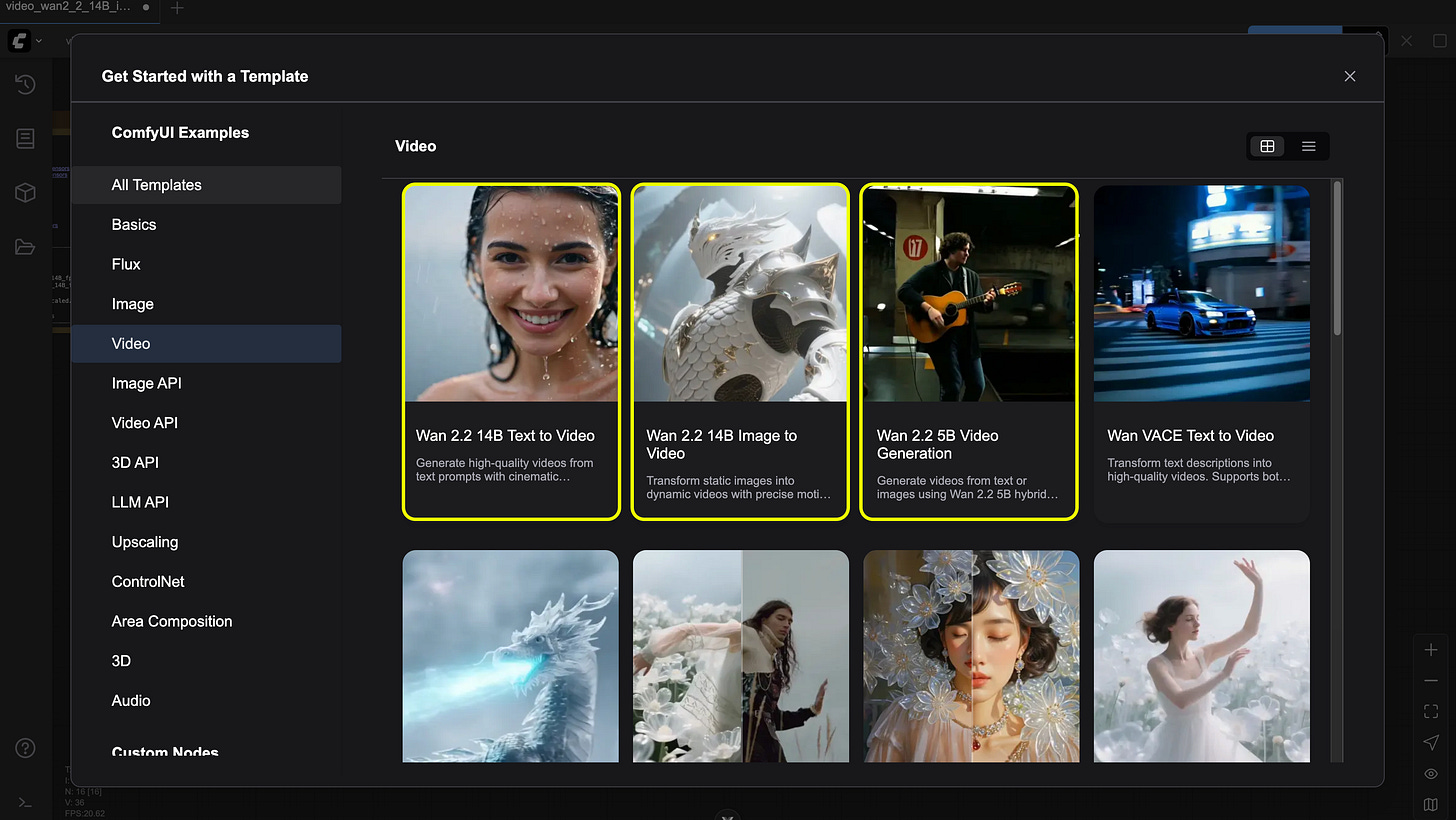

Go to Workflow → Browse Templates → Video

Select "Wan 2.2 Text to Video", "Wan 2.2 Image to Video", or "Wan 2.2 5B Video Generation"

Download the required models when prompted by the pop-up

Click Run and start generating!
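If you prefer to queue a template from a script instead of the UI, ComfyUI's local server accepts workflows over HTTP at its `/prompt` endpoint. The sketch below assumes the default address `127.0.0.1:8188` and a workflow saved in ComfyUI's API format; `build_prompt_request`, `queue_workflow`, and the file name used below are hypothetical.

```python
import json
import urllib.request
import uuid


def build_prompt_request(
    workflow_api: dict, host: str = "127.0.0.1", port: int = 8188
) -> urllib.request.Request:
    """Build a POST request that queues an API-format workflow on a ComfyUI server."""
    payload = {
        "prompt": workflow_api,          # node graph in ComfyUI's API format
        "client_id": str(uuid.uuid4()),  # lets a client match progress events to this job
    }
    return urllib.request.Request(
        f"http://{host}:{port}/prompt",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue_workflow(path: str) -> dict:
    """Load an API-format workflow file and queue it; returns the server's JSON reply."""
    with open(path, encoding="utf-8") as f:
        workflow_api = json.load(f)
    with urllib.request.urlopen(build_prompt_request(workflow_api)) as resp:
        return json.load(resp)
```

With ComfyUI running locally, `queue_workflow("wan22_t2v_api.json")` would submit the job; the reply should include a `prompt_id` you can use to look up the result.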

Example Outputs

Master Every Light

Professional Camera Language

Advanced Camera Movement

Capture Every Emotion

Complex Motion, Natural Flow

Unlimited Artistic Expression

Content created by @Yo9oTatara, @SyntaxDiffusion, @PurzBeats, and @ComfyUIWiki

Check our documentation for more details: https://docs.comfy.org/tutorials/video/wan/wan2_2

As always, enjoy creating!
